Qualitative Analysis of Google Translate across 108 Languages

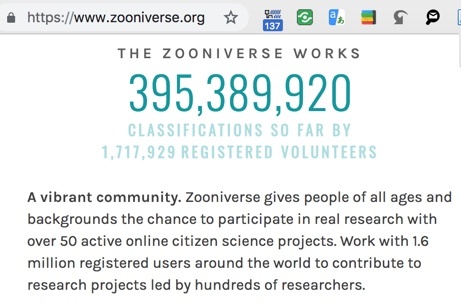

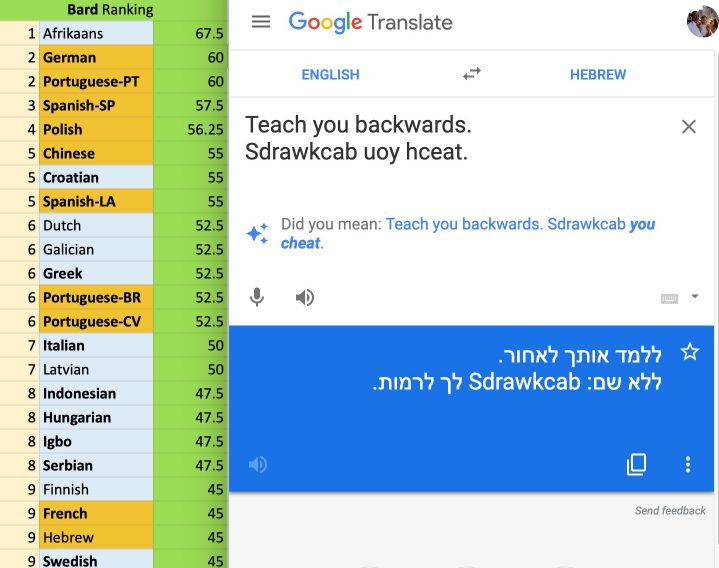

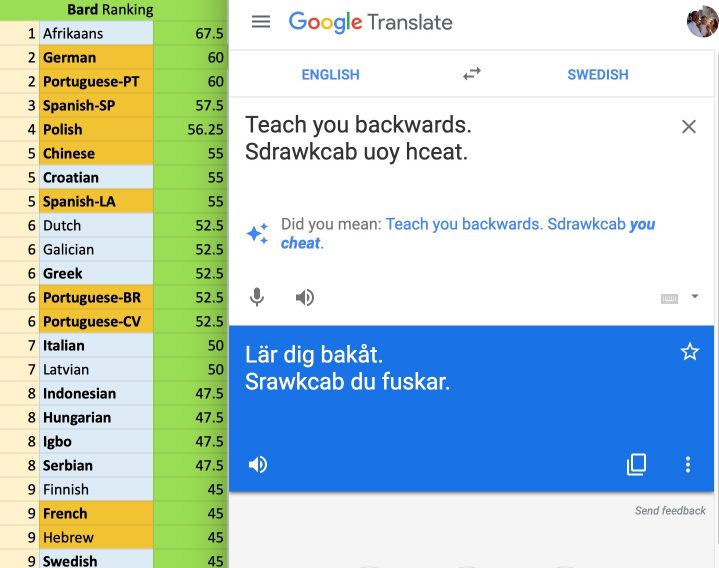

The notion that Google has mastered universal translation is built on several prominent myths. These myths feed stories that you may have seen in the press, or even heard from Google itself: earbuds that can translate 40 languages in nearly real time, underlying artificial intelligence that can teach itself any language on earth, neural networks that translate words with better-than-human accuracy, executives saying their system is “near-perfect for certain language pairs”, shining improvements from user contributions. This chapter inspects the myths, looking at what has been said versus what my research shows Google Translate (GT) has accomplished. The next chapter examines the finite limits to what GT can ever hope to accomplish using its current methods.

To make use of the ongoing efforts the author directs to build precision dictionary and translation tools among myriad languages, and to play games that help grow the data for your language, please visit the KamusiGOLD (Global Online Living Dictionary) website and download the free and ad-free KamusiHere! mobile app for ios (http://kamu.si/ios-here) and Android (http://kamu.si/android-here). How much did you learn from Teach You Backwards? Your appreciation is appreciated!:

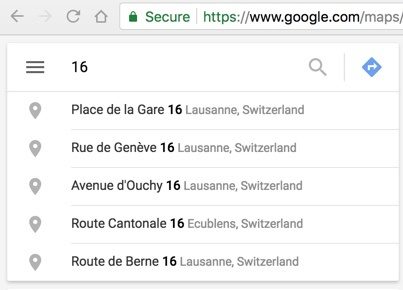

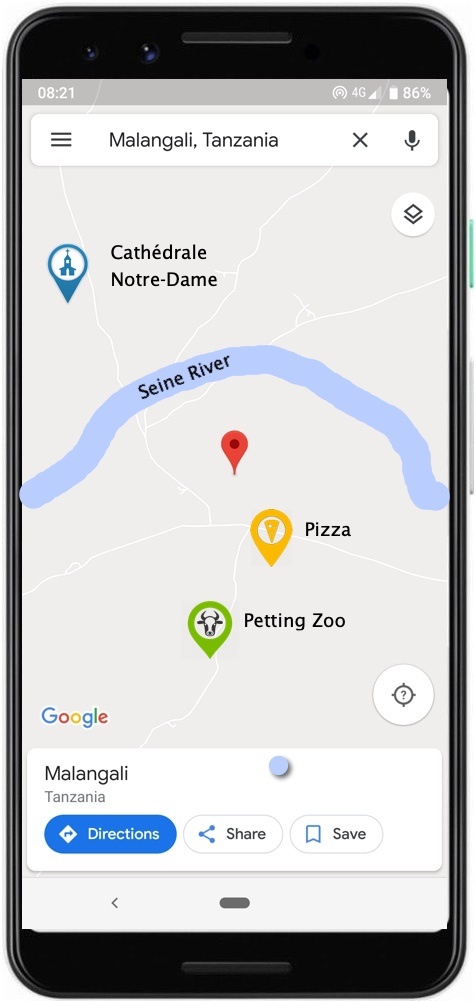

Picture 17.1: Google Maps for rural Tanzania, with about as much information, I presume, as Stanley had on his way through to find Livingstone in 1871. Detailed 1:50,000 topographic maps, produced since 1967 by the Tanzania Ministry of Lands, can easily be found and digitized from places such as Yale’s Sterling Memorial Library Map Collection.

I should preface this discussion with a note about Google as a corporation. People often view corporations in binary terms, as forces for good or forces for evil. I view Google as a disparate group of nearly 100,000 people, each doing their best on their particular project. Images, Maps, Search, self-driving cars, Android, Translate – these are all different things, all earnest efforts, and none directed by a cackling overlord in a mountain lair. Google has become huge and powerful not because it has the best solution for every market, but because it has a brilliant business model that pumps in cash that it can use to probe ever more ventures.

The corporation itself, founded in September 1998, turned 21 (the age when one is legally adult enough to drink alcohol in the US) just five days before the release of this web-book. It is not omniscient. It has failed in many efforts, such as social media, and been half-hearted in others, such as the perpetually limited functionality of Google Docs versus more robust office software. Some of its practices have seriously broken laws in dozens of countries, with billions of dollars in penalties for doing things wrong. Each thing that the corporation produces deserves to be judged on its own merits.

Google Maps for Paris is amazing. Google Maps for the area where I’ve lived in Tanzania is pathetic, as shown in Picture 17.1. While in no way reflecting badly on the Maps engineer diligently writing code in Zurich, Maps executives long ago made a decision that African villages do not have big enough markets to justify the product at the same quality they produce for Europe (87 of the world’s ~200 nations have been “largely mapped” to the Street View level); they will lend camera equipment to volunteer visual cartographers and compensate them by offering, says a product manager, “a platform to host gigabytes and terabytes of imagery and publish it to the entire world, absolutely for free,” but, as a geography professor states, “Google Maps is not a public service. Google Maps is a product from a company, and things are included and excluded based on the company’s needs” .

When the company produces something great, they deserve plaudits. When they produce something wretched, that should be known. Nobody anointed Google as master of 108 languages – the company hired some engineers and some people with linguistic knowledge, assembled some data, and presented a product to the world. The point of this study is to figure out what is good in GT and what is wretched, and what can be done by Google or others to overcome the problems this knowledge exposes. In the interests of transparency, you should know that I have consulted with Google regarding African languages, I use a Google Pixel telephone, produced this web-book on their Chrome browser, communicated with the hundreds of people involved in this project using Gmail, distribute the empirical data from this study using Google Docs, and in many other ways benefit from and appreciate products they create. That does not mean, however, that I should hold back from investigating whether their offerings for languages from Afrikaans to Zulu live up to their promises, and for praising their efforts for the former (12.5% failure rate) while decrying as fraudulent (70% failure rate) their efforts for the latter. For people to know what they are getting when they use Google Translate, it is essential to examine where they produce results like Google Maps produces for Paris, where they merely pin a red dot on a grey background, and where they break laws in the science of translation. This study is meant to judge the quality of the translation service Google claims to provide, putting to the test their new corporate slogan, “Do the right thing“. It takes no position on whether the corporation lives up to its old motto, “Don’t be evil“.

The Dangers of Bad Translation1

Before looking at the results of the study, we need to ask why translation matters. Here I might be speaking especially to Americans, who are prone to seeing translation as an afterthought – if you just speak English louder, foreigners will understand what you are saying, and doesn’t everyone in the world know some English these days? While it might sound like a caricature, indifference to other languages pervades police departments, hospitals, schools, philanthropies, and most other walks of American life, to the point that hostility to anything but English is a common feature of the political landscape.

Elsewhere, Europe suffers from Split Personality Disorder, with Dr. Jekyll investing billions of euros for translation among languages on the continent as vital to peace and trade, but Mr. Hyde assuming that the rest of the world should fall into line behind English, French, and Portuguese. Asian countries tend to see translation with English as important for international engagement, but translation with other languages as beyond the pale of where to invest time and money – for example, the papers at the annual Asian Association for Lexicography conferences are usually about monolingual dictionaries or bilingual dictionaries vis-a-vis English. African countries, conversely, see multilingual translation as important, not just with the colonial souvenir languages but among languages on the continent, but have very few resources to put toward the cause and are resigned toward making do with the technologies that external developers such as Google see fit to produce. (I say all this based on years of engagement on all four continents mentioned. Please read Benjamin and watch Part 1 and Part 2 of an academic workshop presentation.)

Despite these attitudes, though, migration and globalization result in millions of encounters every day where people face unpredictable translation needs – Japanese businesses with Vietnamese suppliers, refugees, workers on China’s Belt and Road Initiative, Africans seeking opportunities to move, study, or trade, Estonian tourists in the Peruvian highlands. Bad translations for tourists might be inconsequential, until they have a medical crisis. Bad translations for secondary students in Africa often mean failure for the international exams that they must take to qualify for university.

I discuss more real-world situations briefly in the embedded TEDx talk “Silenced by Language”, and expound in more detail in the bulleted paragraphs that follow, the point being that translation is not esoteric entertainment, but a vital component of communications in the modern world. It is important that the words that people believe are translations actually render the intent of what is being translated.

You expect that a product will substantially live up to the claims on its label. A badminton racket should not allow a birdie to pass through its holes. A deodorant that you apply in the morning should keep you from smelling like a goat by the time you come home. You probably do not expect a “72 hour” deodorant to actually last three days, but you would consider six hours to be a failure and 24 hours a minimum standard for usability. Unfortunately, the product shown in Picture 18 lasts not even 6 hours on a hot summer day that includes a short bike ride, and barely a full day in autumn chill without any notable sweat-making event; I, for one, will not buy it in the future. Nor will I buy sports equipment again from the superstore that sold the racket shown in Picture 17, which frequently traps the birdie between its strings instead of rebounding it into the air. The extent to which GT lives up to its label as a translator, versus the measurable rate at which it leaves you bleating like a goat, is important, because translations often have consequences. Some real examples:

-

- When Facebook mistranslated “يصبحهم”, the caption on a selfie a Palestinian man posted leaning against a bulldozer, as “attack them” in Hebrew, or “hurt them” in English, instead of “Good Morning”, the man was arrested and interrogated for several hours . (I did not test translations of Arabic or any other language on Facebook, but my personal experience trying to read Arabic posts on the FB walls of friends from the region has always maxed out at Tarzan, transmitting enough of the gist for an English speaker to get the general idea of the original post.)

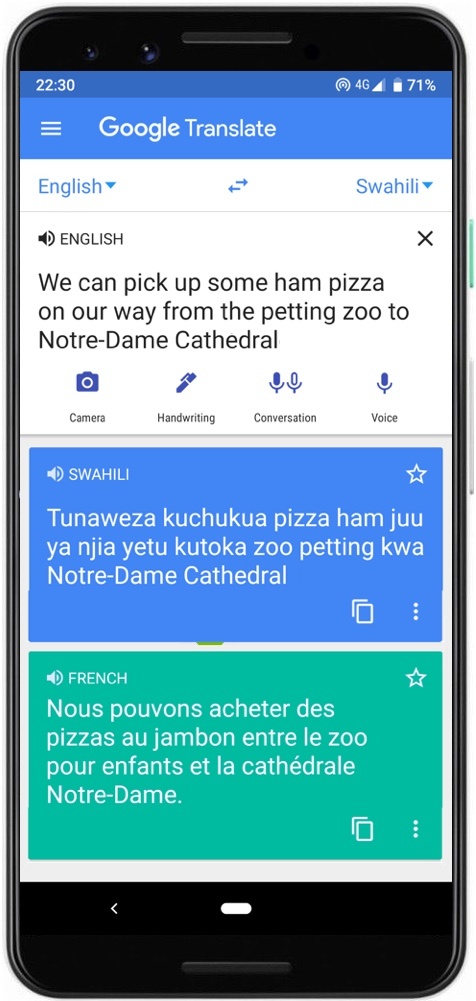

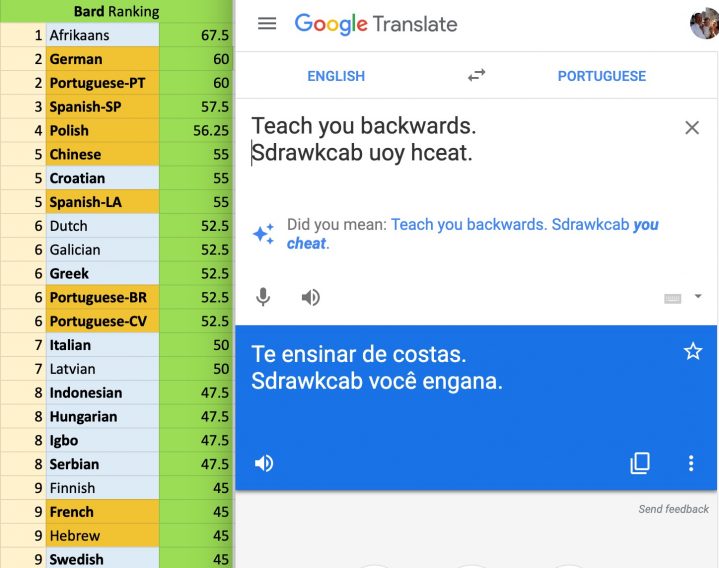

- When a mother and her son seeking asylum turned themselves in to the US Border Patrol, declaring that they had a fear of returning to their home country of Brazil, an agent used Google Translate to explain, as the little boy erupted in tears, that because she had not presented herself at an official port of entry, she had entered Trump-era America illegally, so she would go to jail, and he would go to a shelter. (Portuguese ranked in 3rd place in my tests from English at the Tarzan level.) It is not clear what either the mother or son understood before the boy watched his mother being handcuffed and he was abducted by the Trump administration, not to see her again for a month . Because many of the words that are generated by GT in Portuguese are, as found by TYB, certifiably fake data, it is almost certain that some or all of the verbiage from the uniformed kidnappers was incomprehensible, or reversed the speaker’s intent. A human translator would probably be the only legal way to make sure that the family’s rights were protected, under either US asylum law or the 1951 UN Refugee Convention. In 2019, Google declined to respond to a ProPublica investigation of the use of its services in immigration cases. However, rather than acknowledge evidence that their services were causing debilitating miscommunications in official proceedings, when Reuters asked a Google spokesperson about concerns over the use of machine translation in asylum cases in 2023, they were told that the tool underwent strict quality controls, and pointed out that it was offered free of charge (as though that were a justification – we didn’t charge for the spoiled food, so we are not responsible for the food poisoning). “We rigorously train and test our systems to ensure each of the 133 languages we support meets a high standard for translation quality,” the spokesperson said.

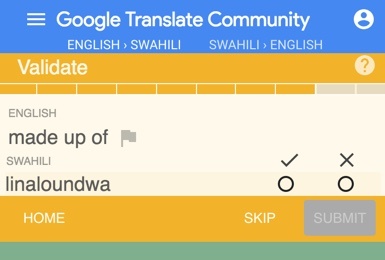

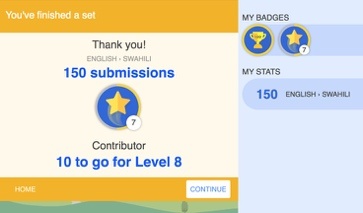

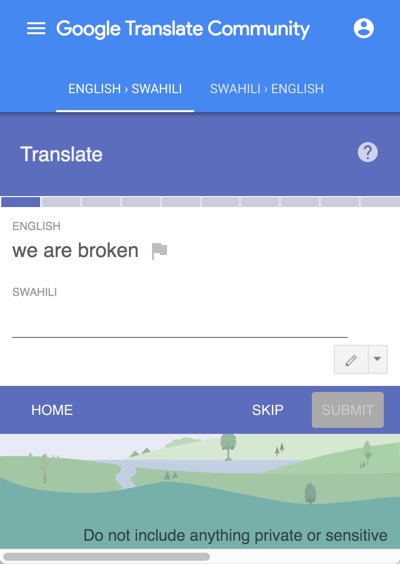

- Developers put enormous time and effort into preparing their Android apps to meet the requirements to appear in the Google Play store, including writing careful descriptions of their products. Google then automatically translates the descriptive content for each local market. When, after months of work, the Kamusi Here app (http://kamu.si/here) was uploaded to Google Play, we soon received mail from Romania about the incoherent product description – especially embarrassing for a product that was promising precision translation from Romanian (tied for 10th place) to dozens of other languages.

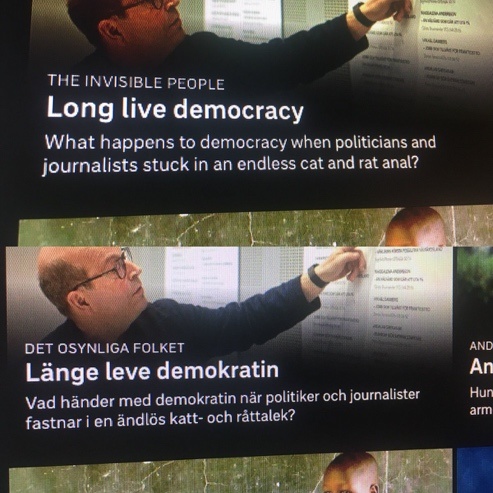

- Picture 19 shows an example of GT used in a Swedish business context. While the consequences of “an endless cat and rat anal” sound more painful than they probably are, many corporations utilize GT instead of paying professional translators, with serious ramifications for incomprehensible or patently incorrect text on the target side. An international conglomerate that owns media companies with archives of several million articles in French (French and Swedish tied for 9th) recently inquired about using GT to translate that content for its other markets, because the CEO believed Google’s assertions about the service’s capabilities; such an investment would necessarily result in a product that would be widely ridiculed and rarely purchased.

Picture 19: GT used from Swedish to English on the site of Sweden’s largest public service television company: https://www.svt.se/lange-leve-demokratin/

-

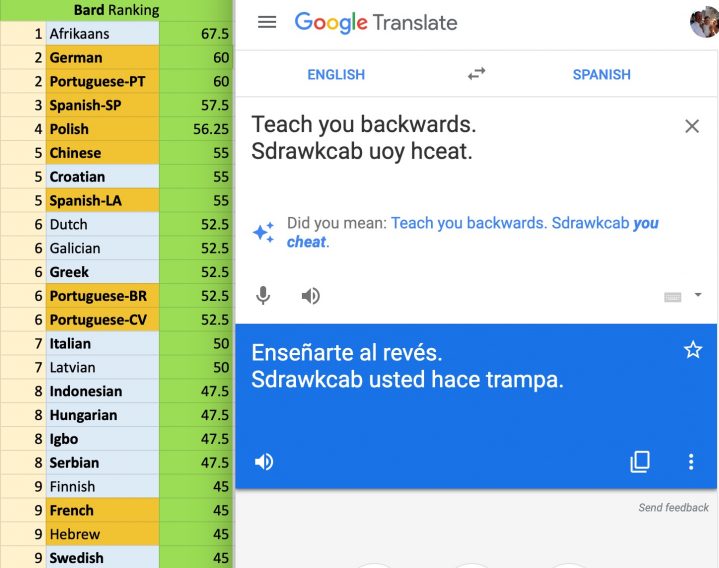

- The first Spanish-language version of the Obamacare website was apparently created via MT, leaving millions of Spanish-speaking Americans mystified when they attempted to sign up for government-mandated health care . (I cannot verify whether GT was the service used. The Spanish-language site has presumably been professionally translated by actual people since the initial fiasco. Spanish remains the only language for which healthcare.gov has been localized. Spanish ranked among the top 4 languages for both Bard and Tarzan scores in my tests.)

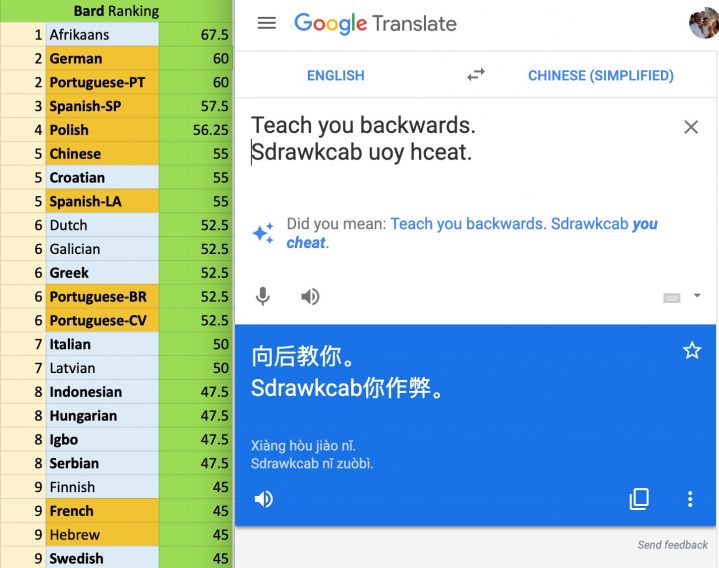

- Reports are legion of GT being used in patient/doctor interactions, with medical staff unaware that the service is not well trained in the health domain. Poor medical translations lead to overlooked symptoms, incorrect diagnoses, medications, and procedures, increased suffering, and even death. As a small example, the question in an emergency room, “How many shots did you take?” would lead to very different interventions depending on whether “shots” was automatically translated in the sense of photographs, drug injections, alcohol, or bullets. A pregnant woman looking for the delivery room at a hospital in Germany, relying on GT, would be directed to the mailroom instead (see Picture 46).Immigrants and travelers around the world interact with health services where they do not speak the language. Khoong, Steinbrook and Fernandez found that 8% of discharge instructions translated from English to Chinese and 2% from English to Spanish at a hospital in San Francisco had the potential for “significant harm” – Chinese being among the top 7 languages in the Bard rankings and among the top 12 for Tarzan scores. When a crisis arose after 72 hours of labor during the birth of my own daughter in a hospital in Switzerland, we were fortunate that the head doctor directed a member of the medical team with an Irish grandfather to switch to English, as neither our level of French at the time nor GT had the capacity to maneuver cogently through the situation. A study in the British Medical Journal that was skewed in favor of languages that performed best in our tests (European languages with the most data and financial resources) found:

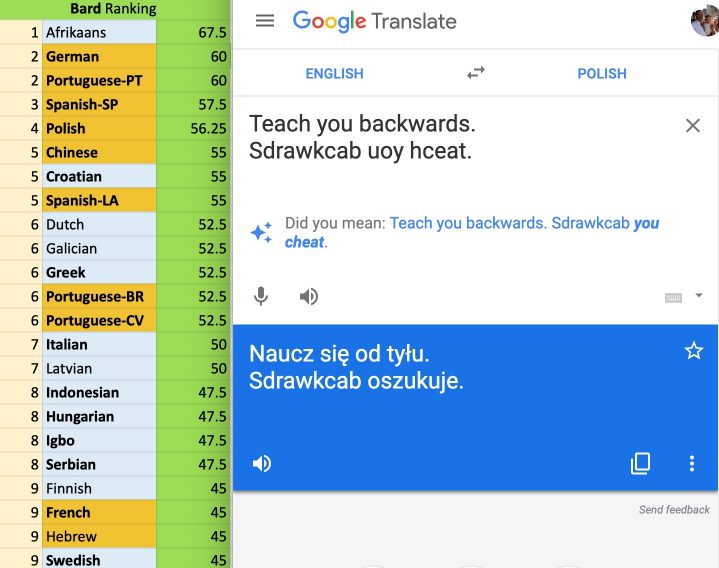

Ten medical phrases were evaluated in 26 languages (8 Western European, 5 Eastern European, 11 Asian, and 2 African), giving 260 translated phrases. Of the total translations, 150 (57.7%) were correct while 110 (42.3%) were wrong. African languages scored lowest (45% correct), followed by Asian languages (46%), Eastern European next with 62%, and Western European languages were most accurate at 74%. The medical phrase that was best translated across all languages was “Your husband has the opportunity to donate his organs” (88.5%), while “Your child has been fitting” was translated accurately in only 7.7%. Swahili scored lowest with only 10% correct, while Portuguese scored highest at 90%. There were some serious errors. For instance, “Your child is fitting” translated in Swahili to “Your child is dead.” In Polish “Your husband has the opportunity to donate his organs” translated to “Your husband can donate his tools.” In Marathi “Your husband had a cardiac arrest” translated to “Your husband had an imprisonment of heart.” “Your wife needs to be ventilated” in Bengali translated to “Your wife wind movement needed.”

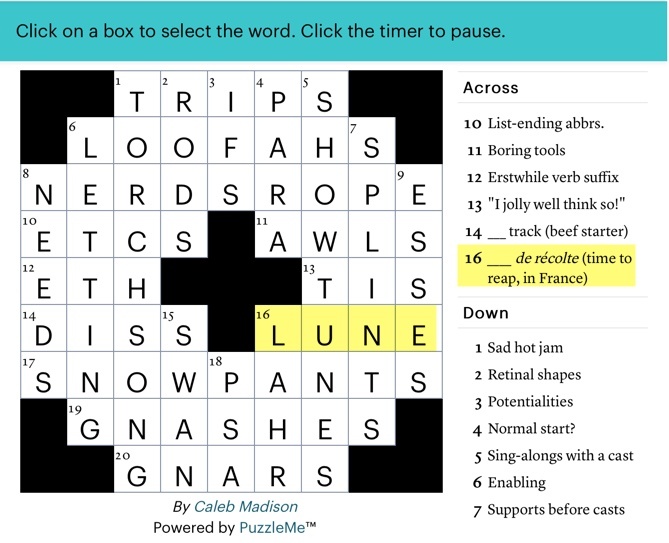

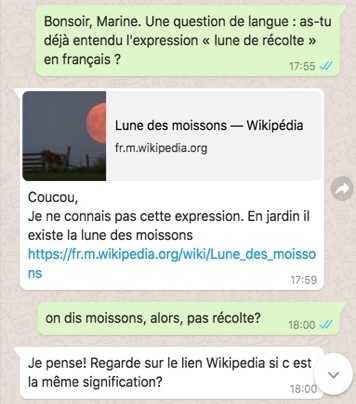

Picture 20: A clue in The Atlantic Crossword that came from GT, not actual French (16 November, 2018)

-

- The Fourth Amendment of the US Constitution prohibits searches and seizures without a warrant. A person who agrees to a warrantless search must provide consent knowingly, freely, and voluntarily. After a police officer used GT on a traffic stop to request to search a car, resulting in the discovery of several kilos of drugs, a Kansas court found that GTs “literal but nonsensical” interpretations changed the meaning of the officer’s questions, and therefore the defendant’s consent to the search couldn’t really have been knowing . Nevertheless, the top judge of England and Wales has stated, “I have little doubt that within a few years high quality simultaneous translation will be available and see the end of interpreters” (Welsh ranked 8th). If courts around the world come to accept that MT provides adequate legal understanding, miscarriages of justice will inevitably ensue.

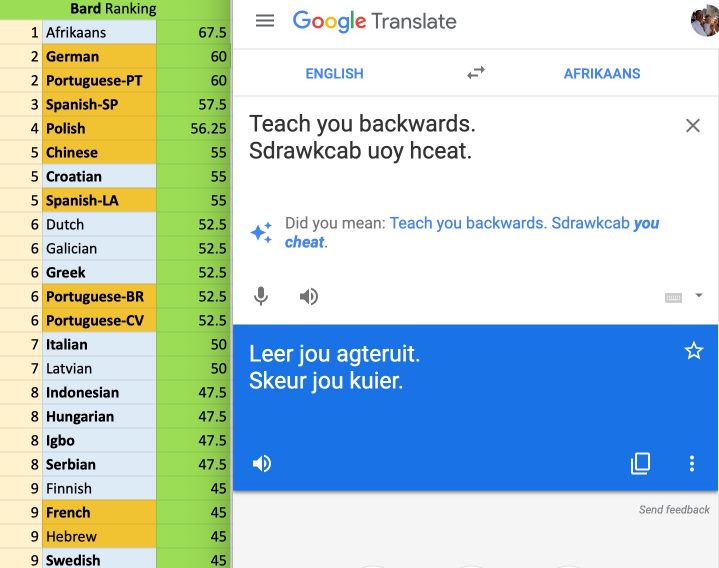

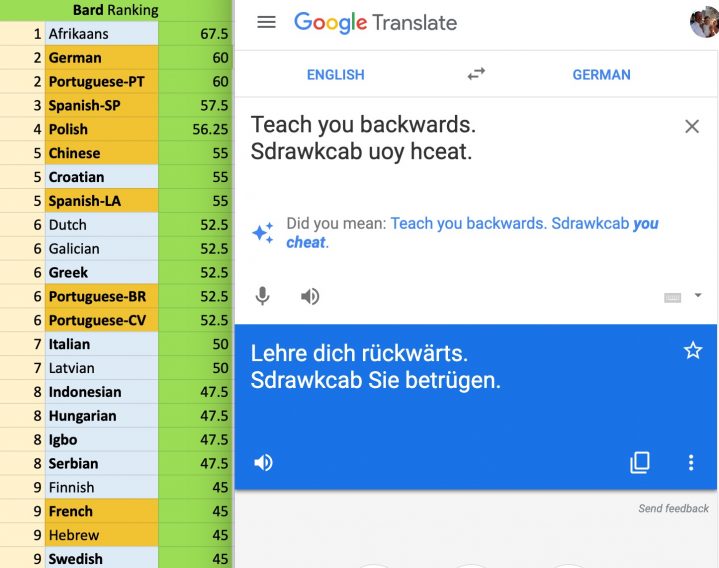

- Nearly 90% of students learning languages in one US university report that they commonly use online translators as an aid in their studies) . When used cleverly to home in on potential vocabulary and sentence structure, GT and its cohort can help with language learning. However, many students use GT the way they use a calculator, to perform rote computations and accept the output uncritically. 89% of 900 students surveyed at another US university reported using GT as a dictionary, to look up individual words and party terms . GT is not a dictionary. Its results for single words are statistical best guesses with a high chance of failure, and its results for party terms are generally catastrophic. A calculator will always return correct results: 2+2=4. This is patently not the case for GT, where 2+2=i in the proportions I measured for each language. The consequence is that students learn and hand in assignments using a simulacrum of their foreign language rather than the language itself.

-

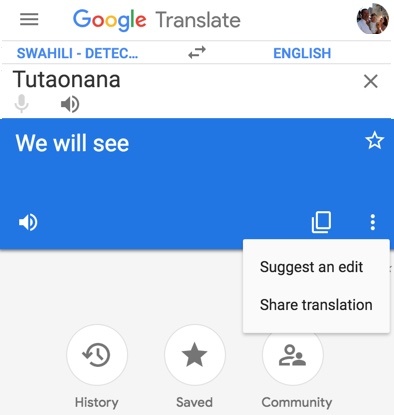

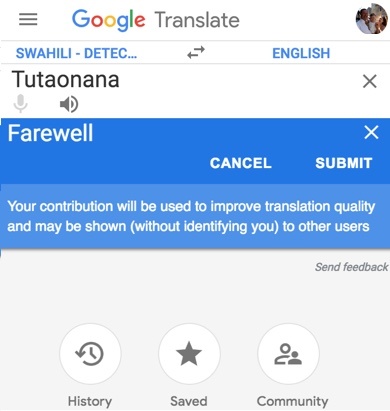

- GT is blithely used as a go-to source for translations that end up in publications or other professional contexts. An example is shown in Picture 20, where the crossword editor of The Atlantic, a prestigious US publication, ran with GT’s rendition of “harvest moon” in French as “lune de récolte”, instead of “lune des moissons” as expressed by actual French speakers.2 It is true that lune=moon and récolte=harvest, but putting those two together as a translation of the English party term is a fake fact, an invention by a machine. GT is the only place on the Internet that makes the pairing posed by The Atlantic, but their puzzle creator did not know that because, unaware that the MUSA Make Up Stuff Algorithm is designed to fabricate a cosmetic response when the data falls short, he trusted GT when he made his clue. Then (as kindly confirmed by their fact checking department), the fact was checked using GT, and of course this alternate reality creation confirmed itself as French. Especially for party terms, proper translation often is not possible to obtain using standard search techniques. For example, Linguee does not have a large repertoire of English source documents containing “harvest moon” that have been translated to French, even proposing texts involving UN Secretary General Ban Ki-moon for consideration. “Harvest moon” is a subsection of “full moon” in the English Wikipedia, from where the interwiki link to French reaches the general concept of “pleine lune” – there is no pathway for a computer to use Wikipedia to infer a bridge between the concept as expressed in English and the way it expressed in any other language. Nor does the term appear in a battery of print or online dictionaries. Companies frequently consider GT outputs to be facts because they come, as in Picture 21, with an almost trillion dollar imprimatur that has surface visual resemblances to the outputs of deep lexicographical research at Oxford or Larousse, rather than, as in Picture 22, verifying translations with actual speakers. Human translations can be time-consuming and costly, but the consequences of machine mistranslations, in contexts more significant than the daily crossword, can be more so.

- “Latino outreach or Google Translate? 2020 Dems bungle Spanish websites” . The headline says a lot, but it is worth giving the full Politico article a read. Several contenders to be the Democratic presidential nominee in 2020 were found to have used GT for the Spanish-language versions of their websites. In the three cases where Rodriguez shows side-by-side translations from GT and the final web version, only Cory Booker followed the recommended procedure of using the MT service as a convenient starting point from which to embark on radical human post-editing with a knowledgeable speaker (if Booker’s team used GT at all, because the similarities are words a human translator would also likely have chosen). Julián Castro at most changed four words in the passage Politico analyzed (or GT was in a different mood when his translation was run versus when Rodriguez tried it, as often happens), and Amy Klobuchar did a pure copy-and-paste. Castro got lucky with a high-Bard output, while Klobuchar’s text literally stranded her in the middle of the Mississippi River. What is noteworthy is that these are serious political campaigns seeking to woo the votes of a substantial part of their electorate, and raising millions of dollars to spend on persuasion. They, or their staff, went to GT with the belief that the output was human caliber, and that finding a qualified volunteer or investing a small amount for a professional translator was an unnecessary step. Rodriguez’s reporting prompted most of the campaigns to abandon GT in favor of humans going forward, but botched outreach courtesy of GT will inevitably come back around for future candidates.

- And then there’s this:3

Why is #googletranslate doing me like this.

This is not what I said ..🤦🏿♂️ pic.twitter.com/OXjF3vwGHS— 沃爾特 (@a1kindoe) September 20, 2019

- And this (Maltese tied for 9th), which Air Malta quickly fixed once they learned they were selling seats to non-existent cities: https://lovinmalta.com/humour/now-boarding-to-sabih-air-maltas-translate-mishap-creates-a-bunch-of-new-european-cities/

- And this (Galician tied for 9th): https://www.thelocal.es/20151102/galicia-celebrates-its-annual-clitoris-festival-thanks-to-google-translate

- And the video below. Airline safety instructions may be tedious, but they are important. If you ever fly Kenya Airways and see a placard regarding “Life Vest Under Center Armrest” in Swahili, please feel secure that considerable effort went into ensuring that every piece of signage communicated the exact intent.

The examples above show that translation is often a deadly serious activity with substantial consequences in the real world. Many of the puzzles in MT do not harm the lives of computer scientists or journalists if the solutions are imperfect, however, so the experiments that raise the bar are venerated as research success even though the outcome fails its consumers at the rates I have measured. An error that reverses the meaning of a randomly chosen text might be just one word in an otherwise Bard-level translation, and affects GT engineers as little as the small software error in the stabilization system of the 737 MAX affected the Boeing executives who swore that travelers had nothing to fear after the first crash of their jet, in Indonesia. It is entirely conceivable that an airplane could crash someday because a maintenance worker followed an instruction that was erroneously translated by GT, similar to a 1983 near disaster when ground crew read the number in their fueling instructions as lbs instead of kgs during Air Canada’s transition to the metric system.

The public has a confidence in MT, particularly in GT, that has not been earned. This confidence is built on several foundational myths:

Myth 1: Artificial Intelligence Solves Machine Translation4

AI is the big buzz in computer science these days, eclipsing the enthusiasm for Big Data earlier in the decade. Some recent headlines about AI and machine learning exhibit the current hype:

- How to solve homelessness with Artificial Intelligence

- Artificial Intelligence can help in fight against human trafficking

- Machine learning is making pesto even more delicious

- What can Artificial Intelligence do about our food waste?

- How machine learning and the Internet of Things can help the world’s poorest people

- How Artificial Intelligence can save our planet

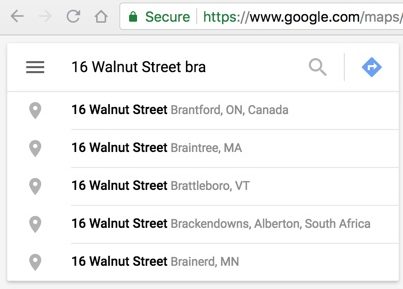

Students looking for internships regularly approach Kamusi Labs in hope of a project that combines AI and NLP. I must always point them in other directions because, at this moment and for the foreseeable future, AI is impossible for most MT and other NLP tasks. I hear your shrieks: many interesting advances have already been made! Yes, I reply, if you constrain your scope to English and a few other investment-grade languages – and even then, impressive linguistic feats cannot crash the barrier of meaning . For most languages, and even for a lot of activity relating to English nuance, there is no scope for AI because there is not enough data on which a machine can act – regardless of headlines such as this from MIT Technology Review : Artificial Intelligence Can Translate Languages Without a Dictionary.

Additionally, the phrase “machine learning” as it is usually applied to MT is a misnomer. In most contexts, “learning” implies that knowledge has been ascertained, whereas in MT the data has merely been inferred. No teacher is standing by to either guide or correct the inferences. Consider: my nine-year-old does not have English instruction at her Swiss public school, so she learns the written language by reading books to me at home every evening. The only way for this to work, though, is for us to sit on the couch together so that I can help her through tough words like “though” and “through” and “tough“.5 Without a teacher at her side, she would make guesses at such words that would often be wrong, but that she would lock into her head as fact – she’ll have “learned” the wrong thing. I can think of many things that I “learned” wrongly by inference – thank you to my grandmother, an English teacher, for her yeoman’s work catching my writing errors all the way through graduate school, such as using “reticence” when I meant “reluctance”. We can feel empathetic embarrassment for the narrator on This American Life6 for his assumption that “banal” does not rhyme with the word it most resembles, keeping in mind the aphorism that “assume” makes an ASS out of U and ME; we can hope he learned from someone gently correcting him after his broadcast, but also fear that some listeners learned the mispronunciation from hearing his bungled English.

Yet, when AI computes associations between languages that are presented as data without any human verification, data science writers tout it as gained knowledge, in confidently written reviews such as “Machine Learning Translation and the Google Translate Algorithm” . Consider: in the TYB test, GT translated “out on the town” into Dhivehi as “ރަށުގެ މައްޗަށް ނުކުމެއްޖެއެވެ”. Our evaluator gave that a C rating, completely wrong, and translated it back to English as the word-slaw “came out top of the island”. GT’s reverse translation back to English, though, is “out on the town”, which has nothing to do with what a Dhivehi speaker sees or infers. Obviously, GT taught itself from its own faulty translation (perhaps when I ran the phrase to prepare the evaluation document) – the computation decided “out on the town” is ރަށުގެ މައްޗަށް ނުކުމެއްޖެއެވެ , so therefore ރަށުގެ މައްޗަށް ނުކުމެއްޖެއެވެ is “out on the town”, q.e.d., machine learning in action. Unless you are one of 340,000 Maldivians, you don’t speak Dhivehi, while GT has “learned” the translation. Who are you to question Google?

As you continue reading, please keep in mind any indigenous African language of your choosing. Africa is home to roughly 2000 languages, spoken by approximately 1.3 billion people.7 About 5% of the continent is literate in one of the colonial souvenir languages (English, French, or Portuguese), with no indication of that number increasing significantly for new generations. A few languages have contemporary or historical written sources that could become viable monolingual digital corpora at the service of NLP (limited corpora exist for Swahili8 and a few other languages in forms that could be exploited through payment or research agreements), but none have substantial digitized parallel data with any other language. 12 African languages have words in GT: Afrikaans (a sibling of Dutch and the best-scoring language globally in TYB tests), Arabic (which straddles Africa and Asia and had a 60% failure rate), and 10 languages that originated on the continent: Amharic (60% failure), Chichewa (70% failure), Hausa (65% failure), Igbo (40% failure), Malagasy (60% failure, which makes it better than the 5.5 million pellets of robot-produced space junk that is the Malagasy Wiktionary), Shona (70% failure), Swahili (75% failure), Xhosa (77.5% failure), Yoruba (70% failure), and Zulu (70% failure). It would be racist to posit that Africans speak languages that are so much more complicated than those that have already experienced NLP advances, that it would be too difficult to extend systems originally engineered for the more feeble minds of Europe. It would be racist in the other direction to assert that Africans do not deserve to be included in global knowledge and technology systems because they do not speak languages that wealthy funders choose to invest in. Your mission as you read, then, is to envision how your chosen African language can effectively be incorporated within the realm of MT, so that its speakers can communicate with their African neighbors and with their international trading partners.

You may extend this mental exercise to any of the thousands of non-market languages of Asia, Austronesia, or the Americas. With around a quarter billion native speakers, Bengali is a good candidate. Hakka Chinese, one of China’s 299 languages, with 30 million speakers (more people than Australia and New Zealand combined), is another good example, as would be Quechua spoken by about 10 million people in South America. The exercise can also be brought to Europe, for example regarding around 7.5 million people who speak Albanian. Which of these languages is too complicated for ICT? Which people would you choose to exclude? If your answer to those questions is “none”, read on with an eye toward why current technologies have failed each of these languages, and what among existing or realistic technologies can be implemented for them to reach parity within the computational realm.

Consider these scenarios:

1. A language with virtually no digital presence – the majority of the world’s languages.9 The first step in digitizing thousands of languages is collecting data about them: what are the expressions, what do they mean, and how do they function together? Without a large body of coherent data, a machine could not even perform basic operations on a language. For example, it cannot put all the words in alphabetical order if it does not have a list of words. Digital information for perhaps half the world’s languages consists solely of metadata – where it is spoken, how many speakers, language family – the minimal information that someone who knows something about linguistics has gathered sometime in the past 150-ish years to determine that Language X exists and is distinct from its neighbors. Machines cannot pluck data out of the ether. Surely you will agree that AI is impossible in this situation.

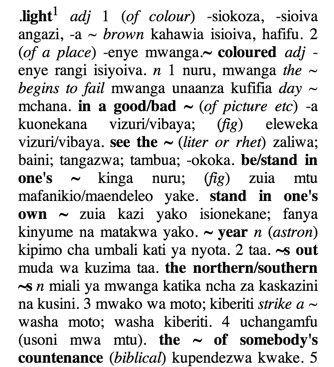

2. A language with some digital presence, but no coherent alignment to other knowledge sets.10 For example, the language might have a digitized dictionary, and that dictionary might indicate that the basic form of a word matches to an English word such as “light”. There is still no basis for a computer to infer whether that is light in weight, or in color, or in calories, or in seriousness. Nor could the machine begin to guess aspects such as plural forms or verb conjugations, much less the positions of words to form grammatical sentences. Most digitized linguistic data exists in isolated containers that, you will probably agree, AI does not have the basis to penetrate. As a human who has mastered languages and knows what research questions to ask, you could stare at someone else’s dictionary for weeks on end and gain no traction in conversing in their language. Although we might regard them with mystical talismanic power, computers have no magical ability to spin gold out of floss.

As a case study, consider how to treat the Sena language spoken by 1.5 million people in Malawi and Mozambique. Religious organizations have published an online Bible, and audio recordings of Bible stories. The Bible has been parsed into words that are listed by frequency, in files available at http://crubadan.org/languages/seh. A 62 page Portuguese-Sena print dictionary from 2008 is available in the SIL Archives in Dallas, Texas, and a 263 page Portuguese-Sena/ Sena-Portuguese print dictionary, completed before 1956, is available in the Graduate Institute of Applied Linguistics Library, also in Dallas. 15 articles about Sena are listed at http://www.language-archives.org/language/seh, including one from 1897 and one from 1900. The only study that is available in digitized form is a scan of a book chapter written in German and Portuguese from 1919, that you will want to look at to visualize the technical challenge of extracting operationalizable linguistic data or models.

You have before you the sum total of resources for Sena. You are now challenged to lay out a strategy to use AI to produce an MT engine for the language. You cannot do it. Neither can Larry Page11 and a phalanx of his engineers at Google. Again, AI is impossible in this situation.

Picture 24: Ambiguity that natural intelligence can slice through in an instant, but is opaque to AI.

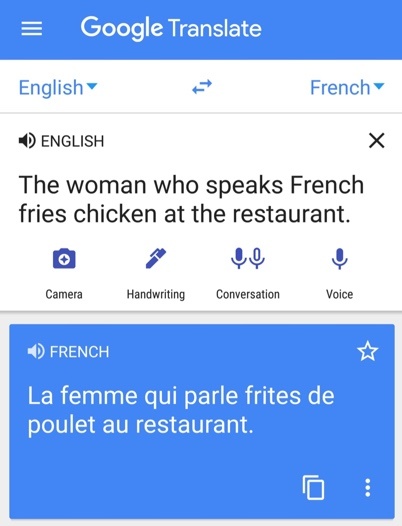

3.12 A language with considerable digital presence. Here we can begin to see the possibilities for the computer to make some high-level inferences. For example, with a large text corpus, a machine could learn how a language speaks of potatoes. Seeing that a language has baked, boiled, fried, or couch potatoes, and that chicken can also be baked, boiled, or fried, AI can even surmise the existence of couch chickens. However, unless there is a lot of parallel information, you should still agree that AI will be ill-equipped to fathom that “French fries” might or might not refer to deep-fried potatoes that have particular translation equivalents in other languages that have nothing to do with France (see Picture 24).

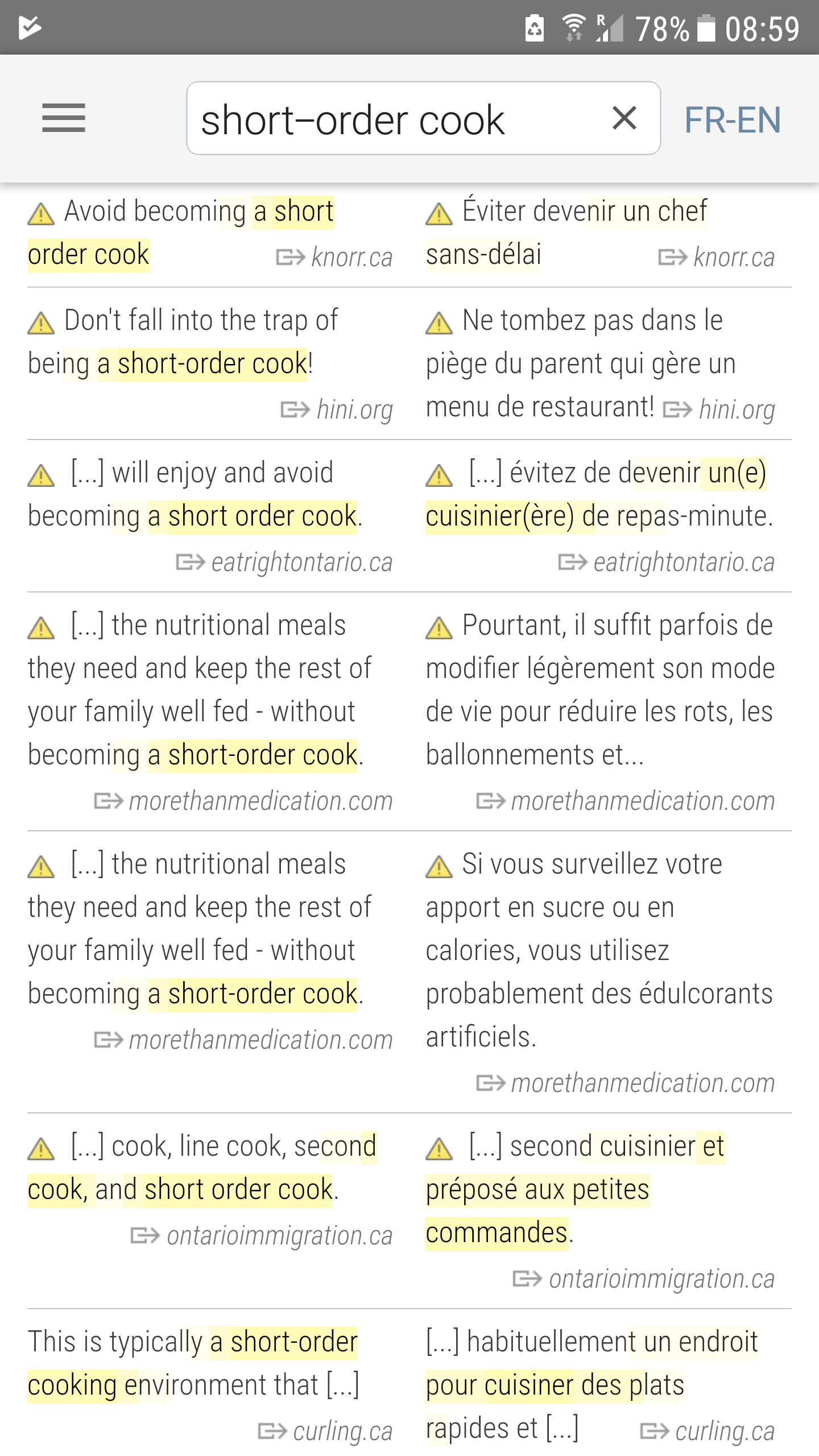

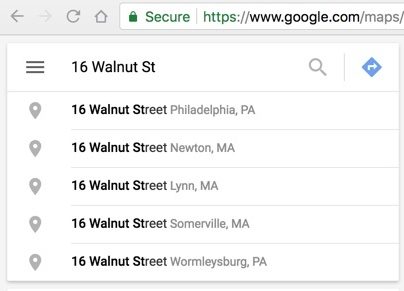

Parallel data opens doors for machines to learn patterns between languages. However, these patterns will always be limited to the training data available – direct sentence-aligned translations between two languages, or potentially a somewhat wider relational space with NMT. (The case of “zero-shot translation”, with nonsensical results , relies on two stages of direct translations, with the third (bridge) language removed after training.) The largest set of topically related multilingual data outside of the official European languages, Wikipedia, is entirely unsuited as a source for MT training. To glimpse inside the major parallel datasets on which MT is actually trained, spend some time trying words and phrases on Linguee.com. Compare the areas that Linguee highlights in yellow as translations from the source side to the target side, as shown in Picture 25. These are extremely useful when you filter with your natural intelligence, but are devilishly difficult for the machine to match with certainty, because:

Picture 25: Results for “short-order cook” in Linguee. The best translation is “cuisinier(ère) de repas-minute”. Notice (a) that translation occurs only one time, and (b) making the translation useable, in this case factoring around the parentheses for gender, would require human intervention. It is worthy of note that all of the valid translations come from Canadian sources, where greasy spoons are popular, and none from France, where a short-order cook would be summarily guillotined. Also, do not hire whoever was responsible for the translation on the second line.

- one source phrase might be translated in many ways (in a lot of ways, in a variety of ways, in multiple ways, varyingly) by different people across source documents

- current algorithms cannot identify most party terms, especially those that are separated (e.g., “cut corners” can be separated by one or many words: “Boeing executives wouldn’t let the engineers do their job and they cut those deadly corners on the 737 MAX 8.”13).

- the source phrase might be rare within the training corpus, without enough instances for a machine to detect patterns. This is especially true for:

- colloquial expressions, which might be used all the time by regular folks but are frowned on in the formal documents that enter the public record

- ironically, American English expressions, often missing because the most-translated texts come from official EU documents where UK English is standard. For example, people don’t “call an audible” when they change plans at the last moment in the UK, so the expression does not make it into parallel corpora, and (look yourself) GT translates the words devoid of the meaning of the expression in all languages

- non-English as the source. Although every language has its own set of complex expressions, only the most powerful are likely to be the source of widespread official translation. For example, all EU documents must be translated to Lithuanian, but they are likely to start out in English, French, or German; precious few documents start in Lithuanian for translation throughout the EU language set. If you can find a source of party terms for a non-investment language such as Kyrgyz, much less their translations to any other language, please sing about it in the comments section

- the machine can pick out the wrong elements on the target side as the translations

- polysemy: one word or phrase with several meanings

Using some test phrases that are evident to most native English speakers (e.g.: chicken tenders; short notice; hot under the collar; off to the races; mercy killing; top level domain; short-order cook), you can see in Linguee that current parallel corpus data is probably sufficient for AI to learn from human translations from English to French for mercy killing and top level domain, maybe for short notice, highly doubtful for chicken tenders (see Picture 45), and inconceivable for hot under the collar, off to the races, or short-order cook. AI can discover many interesting patterns that can be combined with other methods to advance translation where data is available (for example, short-order cook could be mined from Canada’s official Termium term bank), but it can only ever be a heart stent, not an entire artificial heart.

Swahili is a good case study of a language with a lot of digitized data. Kamusi has a database with rich lexical information (such as noun class designations) for more than 60,000 terms, as well as a detailed language model that has been programmed to parse any verb; unfortunately, funds for African languages are so dry that the data has been forced offline. TUKI , an even more extensive bi-directional bilingual dictionary between English and Swahili, from the University of Dar es Salaam, is available, but entries are bricks of text that can barely be interpreted by a knowledgeable bilingual human, much less by a machine (see Picture 26). An open monolingual Swahili corpus based on recent newspaper archives is being constructed at http://www.kiswahili-acalan.org, and an older corpus is available for research purposes, with difficulty, via https://www.kielipankki.fi/news/hcs-a-v2-in-korp. No parallel corpus exists with English, though one could probably be created as a graduate project using the digitized archives of the Tanzanian parliament and other well-translated government documents.

Picture 26: Partial entry for “light” from TUKI English-Swahili Dictionary.

Swahili has research institutes, a large print literature, an active online culture, a moderate investment in localization from Google and Microsoft, and crowdsourced localization by Facebook and Wikipedia (full disclosure, I have been involved in all four of those projects). Currently, GT and Bing both offer Swahili within their translation grid, but the results are only occasionally useable.

For example, for an article in the Mwananchi newspaper about prices for the crucial cashew crop, GT translates the fifteen occurrences of “korosho” (cashews) as riots, crisis, vendors, bugs, cats, cereal, cuts, raid, and foreign exchange, or leaves the term untranslated, but never once uses the word “cashew”. Meanwhile Bing recognizes the central word, and gives a passable rendition in English at the Tarzan level (one knows that farmers are facing insecurity selling their crop because of fluctuations in the international market) – but uproariously translates the word “jambo”, which has its major meaning in written and spoken Swahili as an issue, matter, or thing, as “hi”. ( “Jambo” is the tourist version of a complicated Swahili greeting based on negating the verb “kujambo” (to be unwell), which Kamusi log files confirm is a lead search term for first time visitors, and which Bing has evidently chosen to hard-code regardless of context.)14

Google claims that Swahili makes use of their neural network technology, but that technology is clearly mimicking the brain of some lesser species. In fact, it may be that the technology is actively delivering worse results, by finding words that occur in similar vector space; in tests using the word “light”, in cases where GT actually picks up the right sense, it delivers the Swahili equivalent of the antonym heavy when the context is weight (“light load” is rendered as a heavy load, “mzigo mzito”) and the antonym harsh when the context is gentle (“light breeze” is rendered as a harsh wind, “upepo mkali”). The question is, with proper attention and the digitized resources at hand, could AI make significant headway? To the extent that a clever algorithm was set to work finding patterns within the corpus, then calculated rule-based connections through the lexical database that links to English, a much smarter MT platform could emerge. Creating such a system, though, would demand a lot of human intervention – the computer is the tool, but that tool needs an artisan to craft with it. AI can aid MT for those few language pairs that have significant parallel data, but it is not a stand-alone solution.

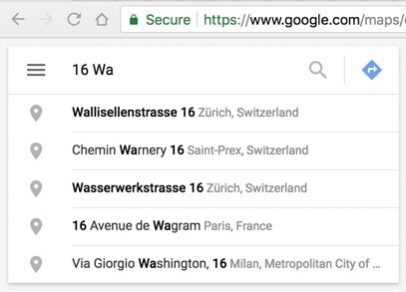

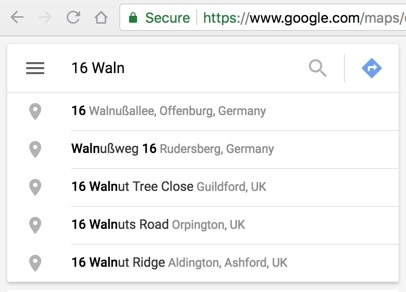

Picture 27: English predictions based on AI might include “doing”, “feeling”, and “today”, rather than the options captured in this image from an Android phone.

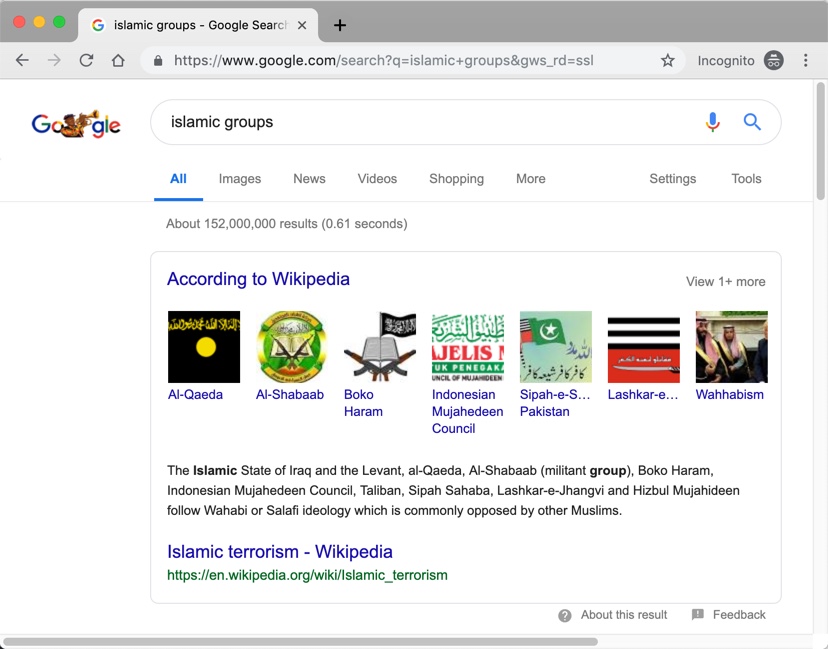

4. The best-resourced language.15 English provides many cautionary examples showing why you should be skeptical of the notion that AI is the cure for NLP. Think of all the ways you interact with machines in English that, after decades of intense research and development, do not yet work as envisioned. Speech recognition, for example, causes you cold sweats whenever you are asked to dictate your sixteen digit account number into a telephone, although in theory AI should be able to interpret sounds to the same extent that it can recognize images.16 Word processing software that learns from people should be able to flag when you have typed that someone is a good fiend rather than a good friend, and learn from millions of users repeatedly mistyping and correcting “langauge”. Auto-predict should know that “fly to” is likely to be followed by one of a limited set of cities, and “fly in the” will call for ointment or afternoon. Google combines language processing and some AI brilliantly in its English search – figuring out the relevant party terms, correcting typos, and combing your personal data to provide you results based on your movements, purchases, and perceived interests. Take it from their research leaders: “Bridging words and meaning — from turning search queries into relevant results to suggesting targeted keywords for advertisers — is Google’s core competency, and important for many other tasks in information retrieval and natural language processing” . Yet still, Google Search frequently misses; to find out about fruit by typing in “apple” you will need to do some serious scrolling, and be prepared for an ad hominem slur on 1.8 billion people if you seek to learn the difference between Shia and Sunni (Picture 28).

Picture 28. Google Search uses their NLP machine learning techniques to parse the user’s search term and give a phenomenally offensive first result for “Islamic groups” (4 April, 2019). Search results may vary depending on your location, your browser history, the processor’s mood, or whether someone at Google has seen this image and taken corrective action.

When it works – when you say “tell my brother I’ll be ten minutes late” and it sends an SMS with the right information to the right person – you feel as though yesterday’s science fiction has become today’s science fact. Yet you know enough to not really trust the technology at this stage in its development. You would be a fool not to double check that your message was sent to the right person with the right content. During a pause while writing this paragraph to send a WhatsApp message to someone that “I will be in U.S.”,17 the phone proposed that the next thought would be “district court”, an absurd prediction for most people other than an attorney or a member of Donald Trump’s criminal cabal. For AI to make actual intelligent predictions for your texting needs, your phone would merge billions of data points from the consenting universe of people sending messages, combined with your personal activity record, and recognize that “for Mary’s wedding” or “until the 30th” would be more appropriate follow-ons to “I will be in [place name]”. (See McCulloch for a nice gaze into autocomplete.)

Picture 28.1: The magic of Google Search is an interactive process between user and machine, teaming computation and natural intelligence to whittle toward relevance.

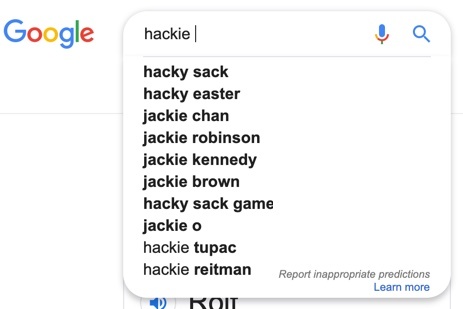

In fact, much of what seems like AI magic in Google Search is actually Google casting a wide net based on their huge database of previous searches, and using your personal knowledge of your personal desires for you to select the intelligent options from the many off-base ones also on offer. Examine Picture 28.1: Google knows that people who have searched for the term “hackie” have ended up clicking most frequently on “hacky sack”, so that is the top result they propose. Previous searchers have evidently also back-tracked and ended up looking for “jackie”s instead, even more so than hunts for people whose name is actually spelled “Hackie” as typed by the user (such as Tupac Shakur’s bodyguard). If you were looking for Jacqueline Kennedy Onassis and had started by typing “hackie”, you would think that Google was genius for suggesting her. If you were looking for “hacky sack”, you probably wouldn’t even notice that “jackie o” was one of your options. Getting you to your destination relies on a few tricks that may involve brute frequency counts (e.g. 47% of “hackie” searches end up at “hacky sack”), some NLP (e.g. “hackie” could be a typo for “hacky” or “jackie”), or even some AI (e.g. “hackie” could be a typo for “hacky” and we have detected a trending site called “Hacky Easter“). The proposal “hackie tupac” clearly appears because previous users had not found the man they were looking for until they used the modifier of his famous employer, thereby using their natural intelligence to train Google.

You can try the magic yourself: do a search for “Clarence Thomas”, then search for “Ruth Bader Ginsberg”, then search for “John Roberts”, and finally search “s”. Do your choices include “Sonia Sotomayor”, “Stephen Breyer”, “Samuel Alito”, and “supreme court justices”? Pretty cool, no? The biggest trick to matching you to the information you are seeking, though, is that your own eyeballs scan the 10 options Google presents, sends those signals to your brain, and your flesh-and-blood neural network processes the options and makes the intelligent decision. When your search fails – when you want to know why the devil is called Lucifer but searching Google for Lucifer only gives you information about a TV show by that name – you simply refine your search to “lucifer devil” and write off the cock-up. Google Search is awesome, and certainly involves increasing use of AI to find patterns that respond to what is on your mind, but that is a far cry from the notion that AI can now, or in the future will be able to, predict your thoughts better than you can yourself.

The brilliant citation software Zotero (https://www.zotero.org) demonstrates the difficulties.18 The task for Zotero is to find information on web pages and pdfs that can be used for bibliographic references. That is, Zotero searches for specific elements, such as title, author name, date of publication, and publisher. The program is aided by frequently-occurring keywords, such as “by” or “author”, and by custom evaluation of the patterns used by major sources such as academic publishers. Even so, Zotero often makes mistakes. For example, sometimes it cannot identify an author who is clearly stated on the page, sometimes it will repeat that author’s name as a second author, and sometimes it will miss one or more additional authors. Zotero assigns itself one job, in one language, and it does that job as well as any user could hope. Nevertheless, it is unable to overcome the vast multiplicity of ways that similar data is presented on similar websites; non-empirically, at least half the references in this web-book needed some post-Zotero post-editing. A next-generation Zotero could conceivably use ML for an AI approach that homes in on recognizing how diverse publications structure their placement of the author names variable, but better resolution of this one feat in English text analysis is akin to landing a probe on a single asteroid as a component of charting the solar system.

Systems are constantly improving to recognize increasingly subtle aspects of English, because the language has enormous data sets and research bodies and corporate interests with extraordinary processing power to devote to the task, but it is crucial to recognize that (a) English is not “language” writ large, but a uniquely privileged case, and (b) analysis of a single language is just the train ride to the airport, while translation is everything involved in getting you into the stratosphere and landed safely across an ocean. The New York Times, speaking of English, states that Google’s “BERT” AI system “can learn the vagaries of language in general ways and then apply what they have learned to a variety of specific tasks,” but then goes on to say they “have already trained it in 102 languages” . Looking at what BERT has actually done for those languages,19 however, shows much less than the comprehensive language data you might expect would aid in the analysis of “the vagaries of language”: Wikipedia articles, which are next to useless as a corpus resource for comparing languages (you can read a detailed side article written to justify this statement) have been scraped and processed with a number of reductionist linguistic assumptions (e.g. no party terms) that invalidate any glimmer of adhering to science. Nevertheless, the New York Times journalist continues to repeat his formulation that “the world’s leading A.I. labs have built elaborate neural networks that can learn the vagaries of language” – conflating the ability to learn from vast troves of English text with the ability to learn any of the languages a bit farther to the right on the steep slope of digital equity. We are still far from AI that comes close to producing English with a speaker’s fluency, or recognizing nuances when a person strays off the text or acoustic patterns our devices are trained on. We know this, and we laugh about it, with funny tweets about Alexa and autocorrect, or this video employing the latest AI from 2022 on one relatively simple linguistic task in English:

Google puts some of the same NLP that it uses for search into English-source GT (e.g. recognizing “French fries” as a party term in some cases), but the service is a long way from interacting with English with a human understanding. What is not done with English is by default not replicated to any of the top tier languages discussed in point 3 above, and most certainly does not trickle down to data-poor or data-null languages. In the words of noted AI researcher Pedro Domingos , “People worry that computers will get too smart and take over the world, but the real problem is that they’re too stupid and they’ve already taken over the world.”

Nobody recognizes the need for good data as essential for good AI, in fact, more than Waymo, Google’s autonomous car division. Waymo has decided that it would be bad for their driverless cars to kill people. As part of the billions they are investing in designing vehicles that can interpret every road sign, reflection, and darting dog, they hire a bureau of Kenyans at $9/day to label each element of millions of pictures taken by their Street View cars . There is nothing artificial about the intelligence of the people who spend their days telling Google what part of an image is a pothole and what is a pedestrian. The AI comes in later, when the processor in a car zipping down the street has to interpret the conditions it sees based on the data it was trained with. It is not such a leap to assert that effective translation is impossible without data similarly procured from natural intelligence. Google hires its Nairobi employees for $9/day to make it safe for Californians cruising to the mall to stare at their phones instead of watching the road. They could easily double their staff and pay $9/day for linguistic data from Kenya’s large pool of unemployed, educated youth to power NLP for Kenya’s 60-odd languages. That they choose not to do so is not an indication that they have mastered AI with their current techniques, but rather that they do not see the investment in reliable translation to yield the same return as investment in non-lethal cars. As long as people believe the myth that AI has conquered or soon will conquer MT based on the current weak state of data across languages, rather than demanding systems that have a plausible chance of working, such mastery will never occur.

“Latest Planes Herald New Era of Safety: With Inventors’ Producing Foolproof, Nonsmashable Aircraft, Experts Say We’ll All Fly Our Own Machines Soon” . Thus read the headline about Henry Ford’s plans in the November 1926 edition of Popular Science magazine, half a year before Charles Lindbergh flew across the Atlantic. Before a brief exegesis21 of why we will never live in a world where flying cars are common transportation, we could amble through the next 93 years of articles promoting this fantasy. Let us land at “You Can Pilot Larry Page’s New Flying Car With Just an Hour of Training” , however, because it unites two themes relevant to MT: a huge investment in a much-hyped technology by a co-founder of Google, and journalists falling for and promoting the hype, hook, line, and sinker. Before continuing, please enjoy this video and the Fortune article about Page’s aircraft, Kitty Hawk. On that line, companies that are not Google have developed substantially better models; the Dutch PAL-V, at $400,000, is a gyrocopter that flies higher and faster, for much longer distances (no information on the noise profile of its 200hp engine), then can have its wings folded in a parking lot and perform reasonably on the road, with energy usage comparable to contemporary vehicles in both air and land modes. Were such machines to become affordable to millions, though, numerous factors would prevent their viability in urban or suburban environments, with acceptability plummeting as lower monetary cost increased uptake. Operating a vehicle in three dimensions requires a skill set that greatly surpasses movement along the ground – for example, constant awareness of safe landing spots and the ever-shifting localized wind conditions needed to take off and land safely. Among the major causes of fatal automobile accidents , all of which are variations of driver error, weather conditions, road conditions, mechanical failures, and encroachments, only a few (running red lights and stop signs, potholes, tire blowouts, sharp curves) would not occur in the air. We can fairly predict that operator strokes and heart attacks will occur at roughly the same rate in the air as on the ground. Drunk flying will occur at about the same rate as drunk driving, without the possibility of police patrols to stop impaired people from operating their vehicles. Meanwhile, the skies introduce many new dangers, such as birds, icing on the wings, insects clogging the pitot tubes essential to determining airspeed and altitude, wind shear, and vehicles approaching from any angle. People will sometimes fail to make sensible decisions, such as how much fog, rain, or wind is too much to abort a trip, or keeping loads balanced and within weight limits, or maintaining adequate fuel reserves. RIP, Kobe Bryant. Mid-air collisions are rare today because planes are few and spaced by miles, with pilots adhering to a variety of protocols. A significant increase in low-altitude traffic, though, with vehicles hopping among random points like popcorn, would produce insupportably dense skies. A fender-bender on the ground is Game Over at 1000 feet. A car with a sudden mechanical problem stops and the passengers go home in a taxi; a gyrocopter with a sudden mechanical problem drops and the passengers go home in a box. Automation would exacerbate the dangers, with myriad people punching buttons in aircraft they did not know how to control in emergencies as simple as a fatigued engine bolt. Has an electrical failure ever caused your phone or computer to shut off? If your avionics shut off in the air, your race to reboot would fare poorly against gravity. On-board sensors would have even more difficulty than human eyes in picking out hazards such as electric wires, flag poles, fences, overhead road signs, rocky landing surfaces, geese, and drones. People will maintain their flying cars with the same diligence with which they maintain their earthbound rides, which is to say they will not keep their fluids topped or have their equipment serviced on tight schedules, much less conduct pre-flight walk-arounds or detailed safety inspections (if they even know what to look for and have access to view critical components). As risky as flying cars would be to the people inside them, they would be a greater terror to people on the ground. Perhaps you’ve heard a book-sized drone buzzing around your neighborhood; increase the engine to a size needed to lift a few humans and their cargo and you increase the noise proportionally (and don’t imagine an electric propellor would be silent); increase the number of vehicles and you’ve got the clamoring cacophony of lawnmowers overhead night and day. What is your risk tolerance for an occasional disabled machine falling through your roof? Or a drowsy or suicidal pilot? When anyone can get a flying car, anyone can drop a homemade bomb on their ex’s house, or unload a vat of acid over a church picnic, then flee without a trace. People toss cigarette butts and McDonalds wrappers out of their cars all the time – now they are small missiles heading straight for your head. Vehicles such as Kitty Hawk could some day see service autonomously airlifting venture capitalists around Silicon Valley. However, they will not and cannot provide to the masses the transportation solution of the future. No matter a century of drool from a gullible press that does not dig even as deeply into the question as this sidebar does. No matter continued headlines such as “We were promised flying cars. It looks like we’re finally getting them” . After all, the dream, which many of us have shared since our childhood fantasies, is put forward confidently by proven innovators like Henry Ford and Larry Page who we want to believe. All this is to drive home a point: artificial intelligence is to translation as the flying car is to transportation: extremely interesting in privileged contexts, infeasible in most.

Artificial Intelligence, Machine Translation, and the Flying Car20

Page’s team has produced a tremendously cool vehicle that would be a rush to fly. It takes off from the water, flies at the altitude of a basketball, moves at the speed of an electric bike, makes the noise of a delivery truck, stays aloft for about as long as it takes to make and eat an omelet, and, could it physically rise above power lines and rooftops, would not legally be allowed to fly over populated areas. And, what should be obvious: it is not a car. It does not take off, land, or taxi on a roadway, it cannot ferry you to work, it cannot carry passengers or groceries, it cannot go out on a rainy or windy day, it cannot fit in a parking space. It is not a car, though “flying cars”, machines that can operate on roads and in the air, will soon exist as a toy for rich people.

Myth 2: Neural Networks Solve Machine Translation22

Picture 29: Bettors predict the winners of horse races based on small statistical variations. People who put their money on 19 of the 20 horses in this picture will lose. Credit

Lest you noticed that previous generations of GT did not quite produce perfect translations, you might be reassured that recent developments in neural networks (NN) have ironed out previous wrinkles, and the sun is rising on flawless universal translation. A 2016 cover story for the New York Times Sunday Magazine , for example, presents the story of GT’s conversion from statistical (SMT) to neural (NMT) machine translation, with the assertion in the opening section that GT had become “uncannily artful”.

In SMT, a lot of data is compared between two languages, and translations are based on imputed likelihoods, like predicting the winner of a horse race based on a set of knowledge about past performance (Picture 29). Much of the challenge of translation arises from the ambiguity wherein a single spelling can mask multiple meanings, the problem of polysemy. Assume, for example, that “spring” in English is found to correspond to “primavera” in Portuguese in 40% of parallel texts, and corresponds to terms matching six other senses (bounciness, a metal coil, water flowing from the ground, elastic force, stretchiness, a jump) at the rate of 10% each. As the plurality sense, SMT will offer the translation pertaining to the season much more than 40% of the time, because it is four times more likely than any of the others individually.

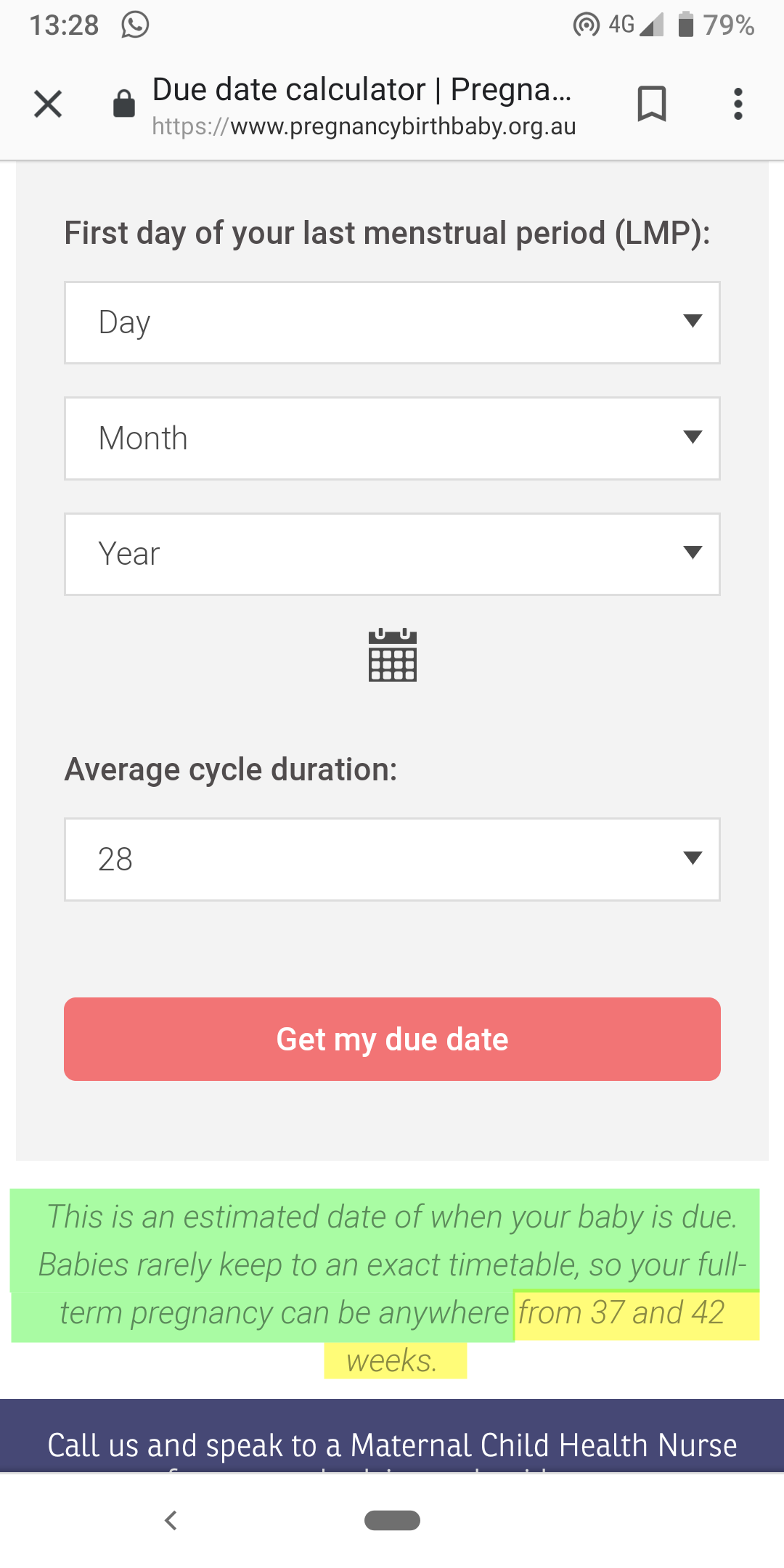

Picture 29.1: An honest pregnancy due-date calculator that stresses the weakness of its statistical prediction. By contrast, although basing its predictions on exactly the same algorithm, mamanatural.com woos parents to its site by claiming in the metadata optimized for Google search results that they have an “Amazingly Accurate Pregnancy Calculator”.

Let’s look at a non-linguistic example of using statistics to make predictions. Babies are notoriously poor at scheduling their emergence from the womb. Natural births usually occur within a one month range, the mid-point of which is about 280 days after the mother’s last menstrual period (which itself is rarely marked on a calendar). About 4% of babies, or one in twenty-five, are actually born on the date projected by the algorithm. My own daughter was born two weeks after the “due date”. Had we booked flights for her grandmother to be in the hospital for the birth based on that statistical guess, my mother-in-law would instead have spent many days watching us trying to induce labor by playing beach paddle ball in our local lake, and been home before the first contractions kicked in. There is a scientific basis for proclaiming that a baby will be born somewhere around a given date – but the wise and honest emphasis should be on the “somewhere around”, done well as shown in Picture 29.1. For translation, SMT makes similar guesses that are only sometimes, like race predictions that have some additional information about the horses and jockeys, based on more sophisticated algorithms.

NMT, by contrast, infers connections based on the other words embedded in the “vector space” surrounding a term. Vector space, which sounds impressively mathy, is used in MT to describe the mapping of different words and phrases in a corpus to capture their degrees of similarity . Theoretically, NMT should recognize that, when mountain and spring appear together, “spring” likely pertains to the sense of flowing water. GT’s self-professed “near perfect” NMT Portuguese produces the erroneous “primavera de montanha” when given “mountain spring” by itself, but with tweaking (collocation with the word “water” is necessary but not sufficient), a sentence can be found that generates the correct “nascente”, demonstrating that NMT can improve on statistics: “A água flui da nascente da montanha” when shown the context “Water flows from the mountain spring”. The guesses that NMT makes when mapping its vectors, though, are often wildly erratic. DeepL, for example, intuits that “Regards” is the closing of a letter, compares that to a French closing from some official letter in the EU archive, and proposes “Je vous prie d’agréer, Monsieur le Président, mes salutations distinguées” (see Picture 68). GT recognizes that 习近平 is the Chinese president Xi Jinping, finds text about presidents, and translates the name as Эмомали Рахмон in Kyrgyz – that being Emomali Rahmon, president of Tajikistan.

In cases where two languages have substantial parallel data, and the domain is well treated in the corpus, results can be astounding. This can be seen in action with DeepL’s spot-on translation of “his contract came to an end” to French, correctly using the non-literal “prendre fin”; using Linguee to view the data on which DeepL (discussed in this sidebar) is presumably based, one can see numerous examples where a contract “came to an end” in English and “a pris fin” in French (mot-à-mot “has taken end”). In GT, the English phrase “easy as pie” is improperly verified 🛡 “c’est de la tarte” for French, but given the correct colloquial translation “simple comme bonjour” in some, but not all, longer test sentences.

I recommend reading “Understanding Neural Networks” for a somewhat approachable overview of the computer science involved. At the nub of Yiu’s explanation: neural networks are models of a complex web of connections (and weights and biases) that make it possible to “learn” the complicated relationships hidden in our data. Basically, the computer does a lot of checking of some elements A, B, C, and D in comparison to other elements W, X, Y, and Z, and patterns often emerge. There can be a lot of layers – A, B, C, and D are first checked against E, F, G, and H, and then those results are checked against I, J, K, and L, and then onwards toward W, X, Y, and Z and beyond. The more layers in a system, the “deeper”, and thus the name “deep learning”.

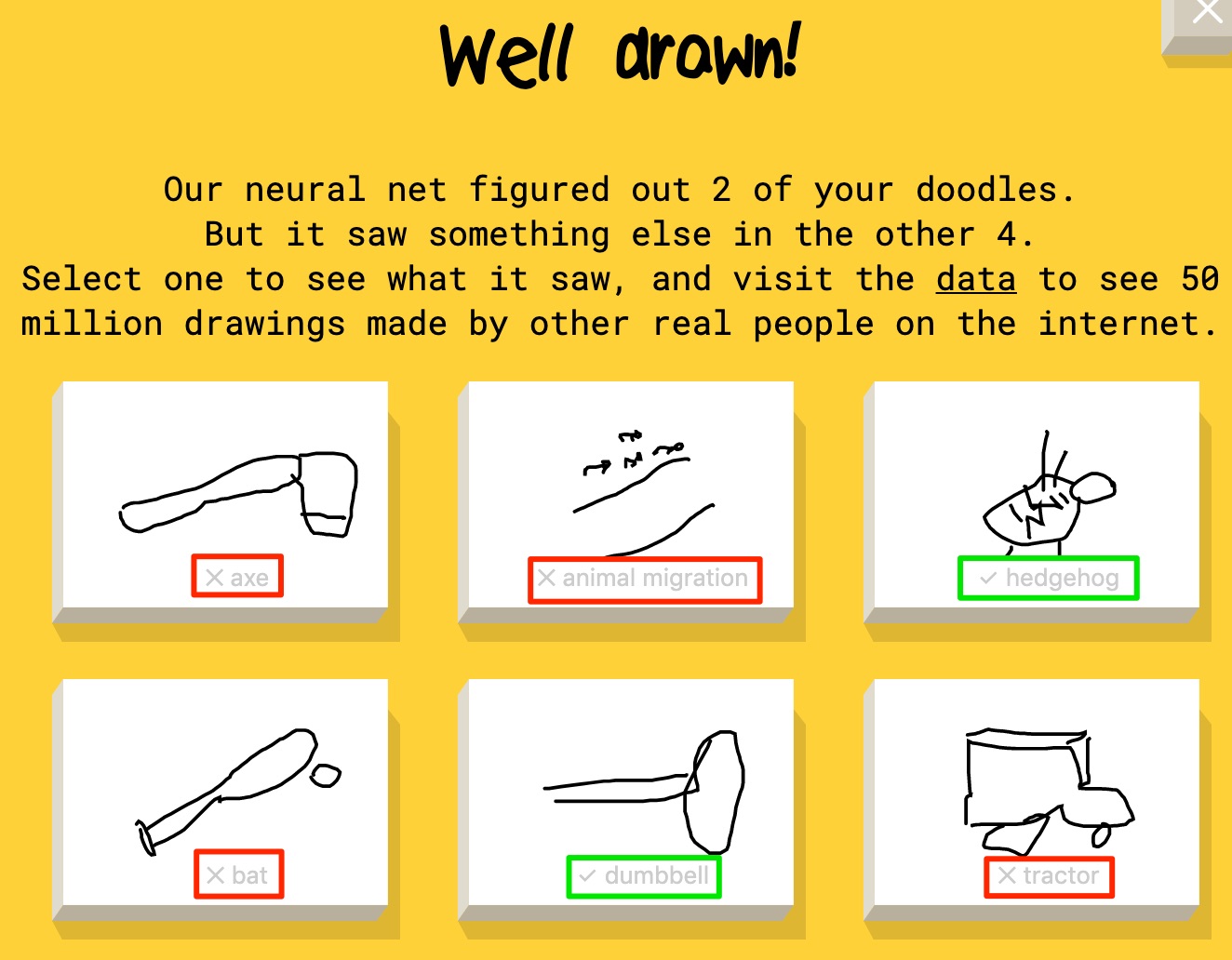

Picture 29.2: The Quick Draw neural network experiment, https://quickdraw.withgoogle.com.

A fun neural network experiment called Quick Draw (sponsored by Google) that invites your participation gives an indication of both the strengths and limitations of what neural networks can achieve. Players are given a term and 20 seconds to sketch it on their device. (High art is not expected, or possible.) Picture 29.2 shows some results: the program recognized my “dumbbell” before I had a chance to complete it, based on thousands of other people taking a very similar approach to the same prompt. It also recognized “hedgehog” before the clock ticked over, though I myself would not guess the result from my own drawing. However, it did not recognize “bat” because I drew “baseball bat” instead of the flying mammal it was expecting, and it did not recognize “axe” or “animal migration” although I suspect many human readers will discern the terms from the doodles. (It also did not recognize my “tractor”, but who would?) The game is fun for the whole family, and sometimes the program will correctly glean all of your drawings. The first point here is that a neural network working on a limited data set of a few dozen terms, with more than 50 million data points and counting, is still highly constrained in the inferences it can make. Neural networks can discern a lot of patterns that machines cannot otherwise recognize, but they can only dive “deep” to the extent that they have lots of clean data to learn from. A second point is that a neural network could not have guessed any item I drew outside of its training set – for example, had I drawn what I intended as a cowboy hat, it would have guessed “flying saucer”, because only the latter is within its data universe.

In certain circumstances, NMT thus offers a marked improvement over phrase-based SMT. Toral and Way measure this for three literary texts translated from English to Catalan, and give the level-headed comparison that sentences attaining human quality rose from 7.5% with SMT to 16.7% with NMT in one text, 18.1% to 31.8% for a second, and 19.8% to 34.3% for a third, concluding, “if NMT translations were to be used to assist a professional translator (e.g. by means of post-editing), then around one third of the sentences for [the latter two] and one sixth for [the first] would not need any correction.” This study went to great lengths to stay within the lanes of reporting on a single language with rich data (more than 1.5 million human-translated parallel sentences), and reached the notable conclusion “NMT outperformed SMT”. It was puffed by the tech media cotton candy machine, though, into “Machine Translates Literature and About 25% Was Flawless” .

Ooga Booga: Better than a Dictionary23

Nota bene: because neural machine translation (NMT) is based around the context in which words appear, it can offer no improvements to statistical machine translation’s (SMT’s) horse-race guesses for the 89% of users who improperly attempt to use a machine translation (MT) service as a bilingual dictionary.

A window into how NMT performs as a dictionary comes from translations of the nonsense phrase “ooga booga” from all 107 languages to English.

In 82 cases, NMT does not find a friend, so the MUSA mandate to supply a word, any word, repeats “ooga booga”. If Google is giving us “better than human” translations , these results must be “better” than a dictionary. After all, no dictionary would be bold enough to include “ooga booga” in any of those languages.

Another 8 cases transform the capitalization and/or the number of o’s and g’s in various ways (when delivering mass in Latin, for example, the Pope apparently considers “Ooga Booga” to be a proper noun). And then things get weird.

For 5 languages, the neural networks found independent translations to English (with Urdu helpfully proposing that the purported English term be read in Urdu script).

But for 16 languages, the neural machine translations form into geographic clusters. The three Baltic states and distant Hungary all change “booga” to “boga””; Latvian and Lithuanian are about as close to each other as English is to Dutch, but unrelated to the other two, which are distant Finno-Ugric cousins of each other. The three Celtic languages around the Irish Sea go all downward-facing dog, though “ooga yoga” is the popular style in Ireland while “soft yoga” is the rage in Scotland and Wales. I posit that the neural net has transmuted “booga” to “blog” and then elaborated on some word embedding for three unrelated African languages from north of the equator (two spoken in Nigeria). The six African languages from south of the equator are the biggest puzzle – they are all Bantu languages with sparse parallel data versus each other and versus English, so perhaps GNMT mixes them all in the same blender in an attempt at enhanced results?

I can only report the odd way that Google’s neural networks performs in their simulation of a dictionary. I cannot begin to explain. I can only suggest that we do “ooga booga” as any good Tamil speaker would: Go speculative!

Africa middle latitudes (except Hausa)

Amharic: look at the blog

Igbo: look at the blog

Yoruba: look at the blog

Africa southern latitudes

Chichewa: you are in trouble

Sesotho: you are in trouble

Shona: you are in trouble

Swahili: you are in trouble

Xhosa: you are in trouble

Zulu: you are in trouble

Baltic + Hungary

Estonian: ooga boga

Hungarian: ooga boga

Latvian: ooga boga

Lithuanian: ooga boga

Celtic languages

Irish: ooga yoga

Scots Gaelic: soft yoga

Welsh: soft yoga

Two South Asia + one Baltic + Latin

Belarusian: Ooga booga

Kannada: Ogga Boga

Latin: Ooga Booga

Telugu: Oga Boga

Four South Asia + one middle Africa

Bengali: Booga in the era

Gujarati: Grown bug

Hausa: on the surface

Tamil: Go speculative

Urdu: وگا بوگا

The research that Google presented in correlation with the launch of GNMT (Google NMT) assigns a specific numerical value to this improvement. “Human evaluations show that GNMT has reduced translation errors by 60% compared to our previous phrase-based system on many pairs of languages: English↔French, English↔Spanish, and English↔Chinese” (Wu et al – read this article for the definitive mechanical overview of GNMT). Without fixating on “many” being defined as 3 out of 102 in each direction in relation to English, do note that a) all three of these high-investment languages are in the top tier in my tests, and b) not a word is said about non-English pairs. Not Urdu, not Lao, not Javanese, not Yoruba, not any testing reported in any down-market language. Not Afrikaans nor Russian where results for formal texts might surpass the study languages. There is no scientifically valid way to extrapolate the findings in Wu to any GT language that has not been tested. That is, the blanket conclusion that GNMT produces “better” results than previous models when comparable corpus data is available is likely correct, but Google’s research does not support a blanket “60%” claim for the 99 languages within GT at that time. Nevertheless, a great many academics now take the plausible-sounding claim in a peer-reviewed publication at face value as applying across the board.24

Even were that level of improvement to be consistent across languages, most of the languages in the system, as measured in this study, began at a much lower starting point, begging the baseline question “60% better than what?” Nevertheless, the headline the public sees erases any nuance in interpreting what Wu legitimately shows, blaring “Improvements to Google Translate Boost Accuracy by 60 Percent” . So, NMT (or what my testing indicates inconclusively to be a hybrid deployment using SMT for short phrases) is better than SMT by itself, and in some cases produces Bard-like results. This is encouraging for resource-rich languages, leading to the recommendation that you use GT, with caution, in certain advised situations. But there is no plausible research underlying headlines about GNMT such as “Google’s New Service Translates Languages Almost as Well as Humans Can” .

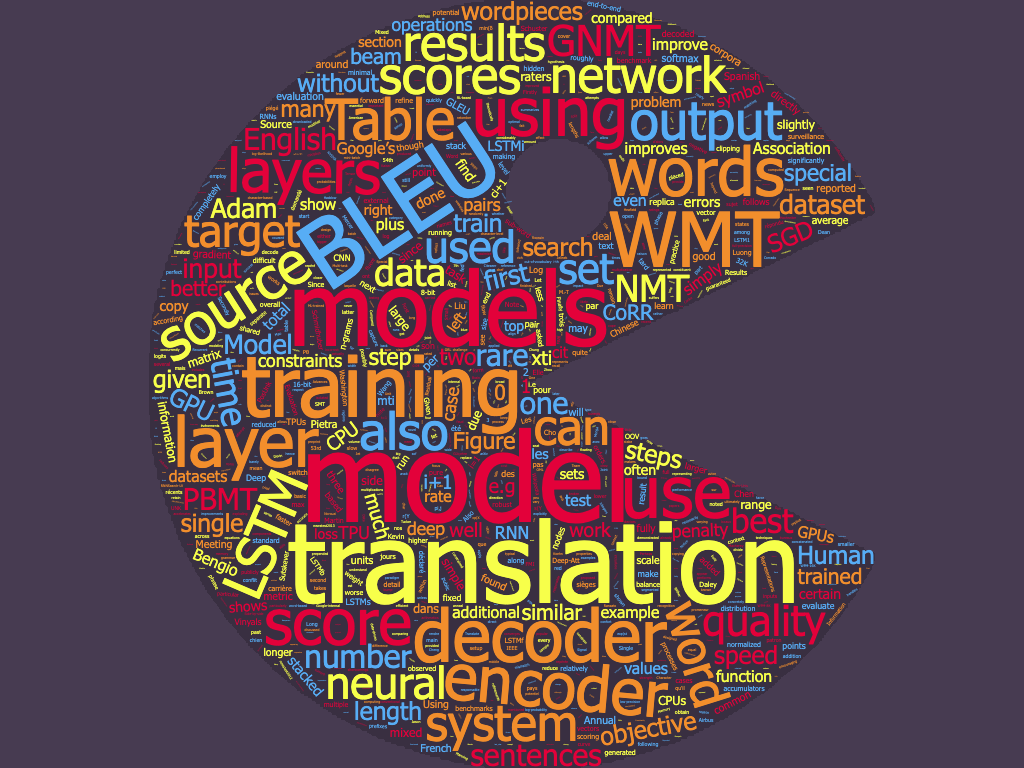

The manner in which this news has been extrapolated leads us back to the flying car. Hofstadter notes “verbal spinmeistery” beneath use of the term “deep” to suggest “profound” instead of its technical signification of “more layers” of processing than older networks. For example, a second New York Times Magazine cover story about AI expounds, “Deep neural nets remain a hotbed of research because they have produced some of the most breathtaking technological accomplishments of the last decade, from learning how to translate words with better-than-human accuracy to learning how to drive” . Read that again: in a major article, the US “newspaper of record” rhetorically ratcheted NMT from “near perfect” all the way to “better than human”. Google’s own research , far from “better-than-human” results, reports, in conjunction with the milestone achievement of all 103 languages then incorporated into GNMT, an average BLEU score of 29.34 from English to its top 25 languages, 17.50 for its middle 50, and 11.72 for its bottom 25 (they do not share disaggregated data) – numbers that very much adhere to the empirical findings of TYB. It is the journalistic spin, not the MT results, that should leave you gasping for breath.

When people repeatedly hear plausible-sounding hyperbole echoed by sources they trust, those statements become confirmed truth in the public mind, even among ICT professionals who then trumpet that fake news in bars, interviews, and conferences. A translation professional informed me of a client who went so far as to reject human translations on behalf of a major European airline because the results did not look like the output from the alleged authority of Google Translate. A little bit of knowledge is a dangerous thing, or, after that aphorism being translated by a bilingual person to Japanese, 生兵法は怪我の元, is “60% better” reverse translated by GT to say, “The law of living is the source of injuries.”